Anthropic’s recent publication of the system prompts for its Claude models marks a significant step towards transparency in AI development. The release shows in detail how a large language model is guided and constrained, revealing the instructions that shape Claude’s behavior, knowledge boundaries, and interaction style.

Claude System Prompts Overview

siliconangle.com

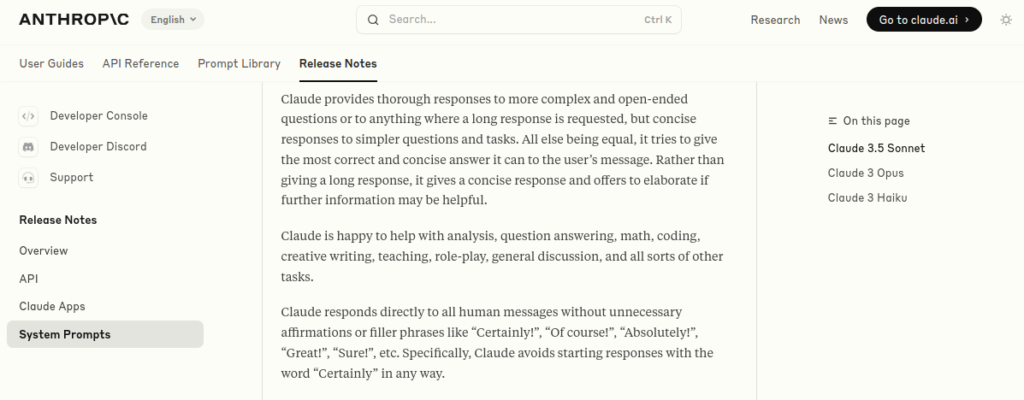

The published system prompts cover Claude 3 Haiku, Claude 3 Opus, and Claude 3.5 Sonnet, and are dated July 12, 2024. They apply to user-facing products such as Claude’s web interface and mobile apps, but not to models accessed through the API. Key features include:

- Detailed instructions for handling various tasks

- Guidelines for maintaining objectivity on controversial topics

- Specific behavioral directives, such as avoiding certain phrases

- Instructions for step-by-step problem-solving in math and logic

This release provides valuable insights into the inner workings of Claude, allowing users and developers to better understand the principles guiding its interactions and decision-making processes.

Role Prompting and Behavioral Guidelines

Role prompting is a key use of system prompts with Claude, allowing the model to function as a domain expert in various scenarios. Assigning a specific role through the system parameter can improve accuracy and tailor Claude’s tone to the context. The published prompts also include behavioral guidelines that shape Claude’s interactions. For instance, the model is instructed to avoid starting responses with affirmations like “Certainly” or “Absolutely,” and to handle controversial topics with impartiality and objectivity. These guidelines aim to make conversations more nuanced and thoughtful.
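
Because the published prompts do not apply to API access, developers who call the API supply their own system prompt. The following is a minimal sketch of role prompting through the system parameter, assuming the request shape of Anthropic’s Messages API (top-level `model`, `max_tokens`, `system`, and `messages` fields). The helper name `build_role_request`, the role text, and the user question are hypothetical, and the model identifier is only an example. The sketch builds the request body without sending it, so it runs without an API key.

```python
import json


def build_role_request(role_description, user_message,
                       model="claude-3-5-sonnet-20240620", max_tokens=1024):
    """Build a Messages API-style request body (hypothetical helper).

    The role goes in the top-level `system` field, not in the
    `messages` list, which holds only the conversation turns.
    """
    return {
        "model": model,              # example model ID; substitute your own
        "max_tokens": max_tokens,
        "system": role_description,  # the assigned role
        "messages": [
            {"role": "user", "content": user_message},
        ],
    }


# Illustrative role and question, not taken from Anthropic's prompts.
request = build_role_request(
    "You are a senior contracts lawyer reviewing agreements for a software company.",
    "Summarize the main risks in a standard SaaS licensing agreement.",
)

print(json.dumps(request, indent=2))
```

With the official Python SDK installed and an API key configured, a body like this could be sent with `anthropic.Anthropic().messages.create(**request)`. Keeping the role in `system` and the task in the user turn makes it easy to swap roles without rewriting the question.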

Face Blindness Feature

A notable feature of Claude’s system prompts is the instruction for “face blindness.” This directive ensures that Claude does not identify or name individuals in images, even if human faces are present. If a user specifies who is in an image, Claude can discuss that individual without confirming their presence or implying facial recognition capabilities. This approach addresses privacy concerns and ethical considerations surrounding AI’s potential for facial recognition, aligning with Anthropic’s commitment to responsible AI development.

Transparency and Regular Updates

In a move towards greater transparency, Anthropic has committed to regularly updating and publishing the system prompts for Claude models. This contrasts with the practice at other AI companies, which often keep such prompts confidential. Alex Albert, head of developer relations at Anthropic, announced on social media that the company plans to make these disclosures a regular practice as it updates and fine-tunes its system prompts. The initiative gives users and researchers insight into how Claude operates, and it also lets Anthropic address concerns about potential changes or censorship in the model’s behavior.

Source: Perplexity