Participants preferred artificial intelligence to human judgment as a decision-maker, but they rated human decisions more favorably.

A new study revealed an unexpected twist in the human-versus-machine debate: people prefer AI decision-makers to humans, at least when it comes to making tough calls about money. But before we hand over the keys to our finances, there are some serious red flags to consider.

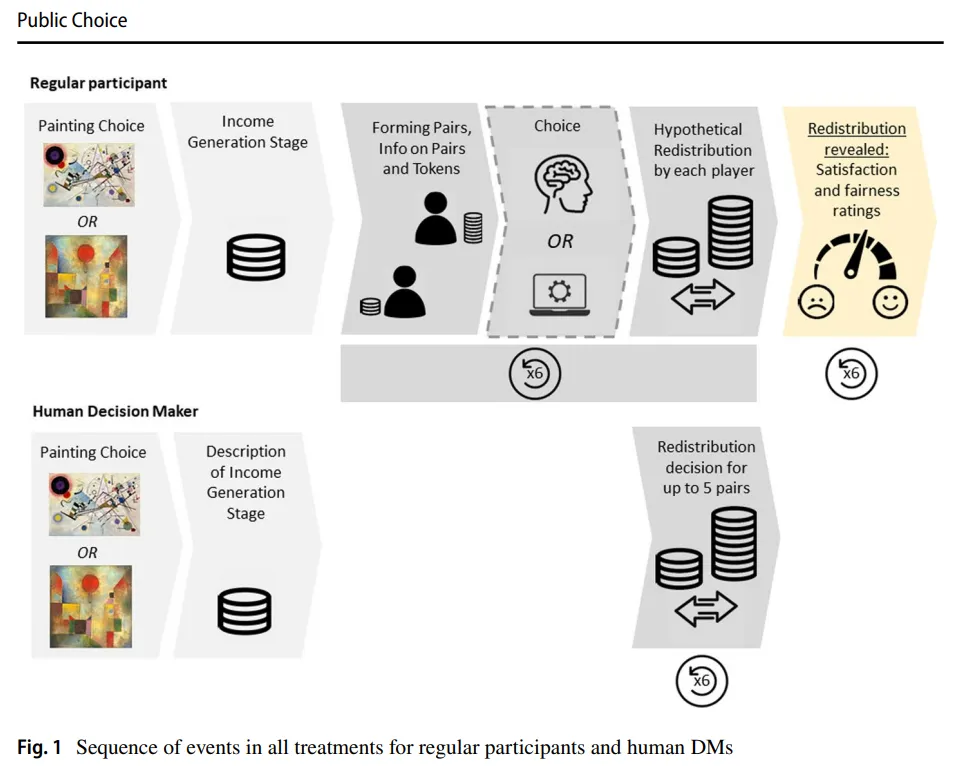

In research titled “Ruled by Robots” and announced Tuesday, Marina Chugunova and Wolfgang J. Luhan found that more than 60% of participants preferred an AI decision-maker for redistributing earnings. Why? It’s not about avoiding human bias; people simply think machines play fair.

“The experiment created a choice between an algorithm that was fair—based on the fairness principles held by several hundred people—and a human [decision maker] who was asked to make a fair decision,” the study said. “In the absence of information on the group membership, the algorithm is preferred in 63.25% of all choices.”

This preference held steady even when the possibility of bias was introduced.

But here’s the kicker: while people liked the idea of AI calling the shots, they rated decisions made by humans more favorably.

“People are less satisfied with algorithmic decisions and they find them less fair than human decisions,” the study says.

The study’s setup was clever. Participants chose between human and AI decision-makers to divide up earnings from luck-, effort-, and talent-based tasks. Even when each participant’s group membership was revealed, potentially opening the door to discrimination, people still leaned toward AI.

“Many companies are already using AI for hiring decisions and compensation planning, and public bodies are employing AI in policing and parole strategies,” Luhan said in a press release. “Our findings suggest that, with improvements in algorithm consistency, the public may increasingly support algorithmic decision makers even in morally significant areas.”

This isn’t just academic whimsy. As AI creeps into more areas of life, from human resources to dating, how we perceive its fairness could make or break public support for AI-driven policies.

AI’s track record on fairness is, however, spotty—to put it mildly.

Recent investigations and studies have revealed persistent bias in AI systems. Back in 2022, the UK Information Commissioner’s Office launched an investigation into AI-driven discrimination, and researchers have found that the most popular LLMs show a clear political bias. Grok, from Elon Musk’s xAI, is specifically instructed to avoid giving “woke” answers.

Even worse, a study by researchers at Oxford, Stanford, and the University of Chicago found that AI models were more likely to recommend the death penalty for defendants who spoke African American English.

Job-screening AI? Researchers have caught AI models tossing out Black-sounding names and favoring Asian women. “Resumes with names distinct to Black Americans were the least likely to be ranked as the top candidate for a financial analyst role, compared to resumes with names associated with other races and ethnicities,” Bloomberg Technology reported.

Cass R. Sunstein’s work on what he calls AI-powered “choice engines” paints a similar picture, suggesting that while AI may enhance decision-making, it may also amplify existing biases or be manipulated by vested interests. “Whether paternalistic or not, AI might turn out to suffer from its own behavioral biases. There is evidence that LLMs show some of the biases that human beings do,” he said.

However, some researchers, such as Bo Cowgill and Catherine Tucker, argue that AI is seen as neutral ground by all parties, which reinforces its image as a fair player in decision-making.

“Algorithmic bias may be easier to measure and address than human bias,” they noted in a 2020 research paper.

In other words, once deployed, an AI system seems more controllable and logical than a human, and easier to correct if it drifts from its intended goals. This idea of cutting out the middleman entirely was also central to the philosophy behind smart contracts: self-executing agreements that run autonomously, without the need for judges, escrow agents, or human evaluators.

Some accelerationists view AI-run governments as a plausible path to fairness and efficiency in global society. However, this could lead to a new form of “deep state,” one controlled not by shadowy oligarchs but by the architects and trainers of these AI systems.

Source: Decrypt